Novelist Guided Tour: Mark Alpert on the IBM Lab

By Mark Alpert

I love visiting laboratories. I’m a science journalist as well as a novelist, and I’ve discovered that the best way to fully understand a complex scientific subject is to meet the researchers in their labs and encourage them to explain their work. So in 2011, when I was seeking story ideas for Scientific American, I arranged a tour of the IBM Thomas J. Watson Research Center in Yorktown Heights, New York.

Set on a low hill thirty miles north of Manhattan, the IBM lab is a legendary incubator of innovations. For instance, it’s the birthplace of DRAM circuits, the memory chips at the heart of most laptop and desktop computers. The lab is also where I got the idea for my first Young Adult novel.

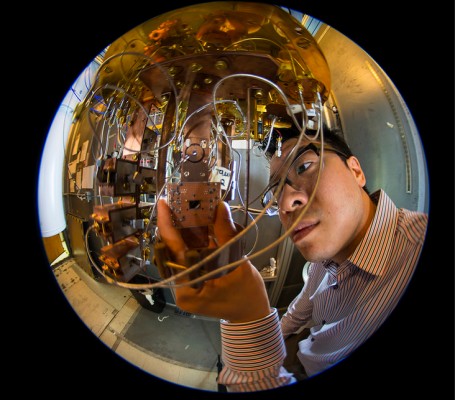

My visit was a kind of journalistic fishing expedition, because I didn’t have a particular story in mind. Mostly, I just wanted to peek into the rooms where researchers were testing new techniques such as quantum computing and new materials such as graphene and carbon nanotubes. The lab was full of brilliant scientists and engineers who were only too happy to describe their research, often in thrilling and bewildering detail. And everywhere I went I noticed big tanks of super-cold liquid nitrogen, which is used to cool the overheated electronics of ultra-powerful computers.

Perhaps the biggest thrill of all was meeting David Ferrucci, leader of the IBM team that invented Watson, the artificial-intelligence system that had become famous earlier that year when it demonstrated its mastery of the quiz show Jeopardy. Like millions of other TV viewers, I’d watched the games in which Watson soundly defeated two of the show’s all-time champions. I was stunned at how well Watson answered general-knowledge questions that were deliberately riddled with puns and sly references. If a computer system can beat humans at this kind of game, I thought, hasn’t it taken a crucial step toward humanlike intelligence?

Ferrucci gave me an overview of Watson’s hardware — ninety IBM servers stacked in ten floor-to-ceiling racks — and its equally impressive software. The system uses a variety of algorithms to decipher the Jeopardy clues and then sifts through thousands of gigabytes of miscellaneous information to come up with hundreds of potential solutions. Then Watson analyzes each possible answer to determine the probability that it’s correct. If the system judges that one of the answers is very likely to be right, Watson sends a signal to push the Jeopardy buzzer. The process doesn’t sound very much like human thinking, and yet there are similarities. For example, both Watson and the human brain use parallel processing — they divide their assigned tasks into smaller jobs and then tackle all the calculations simultaneously. The microchips in Watson’s servers work in concert, much like the corrugated cortices inside the skull.

But does the machine really understand what it’s doing? Does it grasp the meaning behind the words, or is the system simply identifying similarities and connections in its database? As I listened to Ferrucci, I sensed that the line between machine intelligence and the human variety was getting fuzzy. At the same time, an idea for a novel started percolating within my own cortices. Several parts of my brain were working in parallel — while my sober frontal lobes tried to stay on track and continue thinking of stories for Scientific American, my more imaginative neurons sparked with fantastical plots and subplots.

My novelistic calculations accelerated when I moved on to another section of the lab and interviewed Supratik Guha, director of IBM’s physical sciences research. While discussing technologies intended to make computers faster and more energy-efficient, Guha seemed most enthusiastic about “neuromorphic” electronics. These circuits are designed to mimic brain cells, which operate very differently from ordinary transistors.

The connections in the brain are astonishingly complex — each neuron is linked to thousands of others — and, what’s more, they constantly change their configurations. Every time we learn or remember something, our neurons make new connections and alter the old ones. IBM researchers have tried to replicate this mental architecture in their prototype neuromorphic chip; the latest version holds the equivalent of one million neurons, with 256 million programmable links among them. The researchers still have a long way to go — the human brain has about 100 billion neurons — but IBM is clearly making progress toward building a machine that can mimic the processes of human thought.

Then my imagination leaped ahead to a not-so-distant future where scientists had perfected the mechanical brain. I pictured six terminally ill teenagers who were determined to outlive their failing bodies, and a U.S. Army general eager to test whether a human intelligence could be transferred to a robot with neuromorphic circuitry. This idea gradually evolved into my YA thriller titled The Six, which was published in July. (Its sequel, The Surge, will come out next summer.) Appropriately enough, the first chapter of The Six is set in a computer-science laboratory very similar to IBM’s. And I found good fictional uses for many of the things I saw in the lab. If you want to learn how a tank of super-cold liquid nitrogen can get you out of trouble, give the book a try.

*****

Mark Alpert, a contributing editor at Scientific American, writes science thrillers for both adults and teens. His next novel for adults, The Orion Plan, will be published by Thomas Dunne Books/St. Martin’s Press in February.

To learn more about Mark and his books, please visit his website.